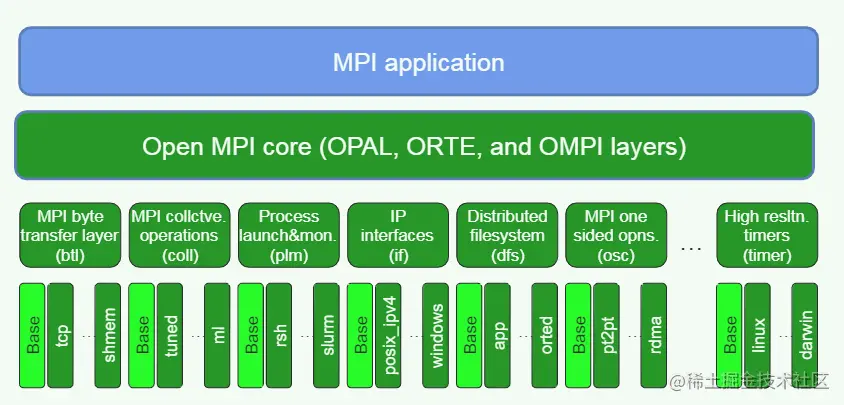

OpenMPI结构

Open MPI 联合了四种MPI的不同实现:

- LAM/MPI,

- LA/MPI (Los Alamos MPI)

- FT-MPI (Fault-Tolerant MPI)

- PACX-MPI

Architecture

Open MPI使用C语言编写,是一个非常庞大、复杂的代码库。2003的MPI 标准——MPI-2.0,定义了超过300个API接口。

之前的4个项目,每个项目都非常庞大。例如,LAM/MPI由超过1900个源码文件,代码量超过30W行。希望Open MPI尽可能的支持更多的特性、环境以及网络类型。因此Open MPI花了大量时间设计架构,主要专注于三件事情:

- 将相近的功能划分在不同的抽象层

- 使用运行时可加载的插件以及运行时参数,来选择相同接口的不同实现

- 不允许抽象影响性能

Abstraction Layer Architecture

Open MPi 可以分为三个主要的抽象层,自顶向下依次为:

- OMPI (Open MPI) (pronounced: oom-pee):

- 由 MPI standard 所定义

- 暴露给上层应用的 API,由外部应用调用

- ORTE (Open MPI Run-Time Environment) (pronounced “or-tay”):

- MPI 的 run-time system

- launch, monitor, kill individual processes

- Group individual processes into “jobs”

- 重定向stdin、stdout、stderr

- ORTE 进程管理方式:在简单的环境中,通过rsh或ssh 来launch 进程。而复杂环境(HPC专用)会有shceduler、resource manager等管理组件,面向多个用户进行公平的调度以及资源分配,ORTE支持多种管理环境,例如,orque/PBS Pro, SLURM, Oracle Grid Engine, and LSF.

- 注意 ORTE 在 5.x 版本中被移除,进程管理模块被替换成了prrte (github.com))

- MPI 的 run-time system

- OPAL (Open, Portable Access Layer) (pronounced: o-pull): OPAL 是xOmpi的最底层

- 只作用于单个进程

- 负责不同环境的可移植性

- 包含了一些通用功能(例如链表、字符串操作、debug控制等等)

在代码目录中是以project的形式存在,也就是1

2

3

4ompi/

├── ompi

├── opal

└── orte

需要注意的时,考虑到性能因素,Open MPI 有中“旁路”机制(bypass),ORTE以及OMPI层,可以绕过OPAL,直接与操作系统(甚至是硬件)进行交互。例如OMPI会直接与网卡进行交互,从而达到最大的网络性能。

Plugin Architecture

为了在 Open MPI 中使用类似但是不同效果的功能,Open MPI 设计一套被称为Modular Component Architecture (MCA)的架构。在MCA架构中,为每一个抽象层(也就是OMPI、ORTE、OPAL)定义了多个framework,这里的framework类似于其他语言语境中的接口(interface),framework对于一个功能进行了抽象,而plugin就是对于一个framework的不同实现。每个 Plugin 都是以动态链接库(DSO,dynamic shared object)的形式存在。因此run time 能够动态的加载不同的plugin。

例如下图中 btl 是一个功能传输bytes的framework,它属于OMPI层,btl framework之下又包含针对不同网络类型的实现,例如 tcp、openib (InfiniBand)、sm (shared memory)、sm-cuda (shared memory for CUDA)

PML

PML即P2P Management Layer,MPI基于这一层,基本所有的通信都是通过这一层实现的,它提供 MPI 层所需的 P2P 接口功能的 MCA 组件类型。 PML 是一个相对较薄的层,主要用于通过多种传输(字节传输层 (BTL) MCA 组件类型的实例)对消息进行分段和调度,如下所示:1

2

3

4

5

6

7------------------------------------

| MPI |

------------------------------------

| PML |

------------------------------------

| BTL (TCP) | BTL (SM) | BTL (...) |

------------------------------------

MCA 框架在库初始化期间选择单个 PML 组件。 最初,所有可用的 PML 都被加载(可能作为共享库)并调用它们的组件打开和初始化函数。 MCA 框架选择返回最高优先级的组件并关闭/卸载可能已打开的任何其他 PML 组件。

在初始化所有 MCA 组件之后,MPI/RTE 将对 PML 进行向下调用,以提供进程的初始列表(ompi_proc_t 实例)和更改通知(添加/删除)。PML 模块必须选择一组用于达到给定目的地的 BTL 组件。这些应缓存在挂在 ompi_proc_t 之外的 PML 特定数据结构上,也就是说PML层应该给它定义的一系列通信函数指针赋值,让PML层知道该调用哪些函数。然后,PML 应该应用调度算法(循环、加权分布等)来调度可用 BTL 上的消息传递。

MTL

Matching Transport Layer匹配传输层 (MTL) 为通过支持硬件/库消息匹配的设备传输 MPI 点对点消息提供设备层支持。该层与 MTL PML 组件一起使用,以在给定架构上提供最低延迟和最高带宽。 上层不提供其他 PML 接口中的功能,例如消息分段、多设备支持和 NIC 故障转移。 通常,此接口不应用于传输层支持。 相反,应该使用 BTL 接口。 BTL 接口允许在多个用户之间进行多路复用(点对点、单面等),并提供了该接口中没有的许多功能(来自任意缓冲区的 RDMA、主动消息传递、合理的固定内存缓存等)

这应该是一个接口层,负责调用底层真正通信的函数。

阻塞发送(调用不应该返回,直到用户缓冲区可以再次使用)。此调用必须满足标准 MPI 语义,如 mode 参数中所要求的。有一个特殊的模式参数,MCA_PML_BASE_SEND_COMPLETE,它需要在函数返回之前本地完成。这是对集体惯例的优化,否则会导致基于广播的集体的性能退化。

Open MPI 是围绕非阻塞操作构建的。此功能适用于在不定期触发进度功能的情况下可能发生点对点之外的进展事件(例如,集体、I/O、单面)的网络。

虽然 MPI 不允许用户指定否定标签,但它们在 Open MPI 内部用于为集体操作提供独特的渠道。因此,如果使用否定标签,MTL 不会导致错误。

非阻塞发送到对等方。此调用必须满足标准 MPI 语义,如 mode 参数中所要求的。有一个特殊的模式参数,MCA_PML_BASE_SEND_COMPLETE,它需要在请求被标记为完成之前本地完成。

PML 将处理请求的创建,将模块结构中请求的字节数直接放在 ompi_request_t 结构之后可用于 MTL。一旦可以安全地销毁请求(它已通过调用 REQUEST_FReE 或 TEST/WAIT 完成并释放),PML 将处理请求的适当销毁。当请求被标记为已完成时,MTL 应删除与请求关联的所有资源。

虽然 MPI 不允许用户指定否定标签,但它们在 Open MPI 内部用于为集体操作提供独特的渠道。因此,如果使用否定标签,MTL 不会导致错误。

OSC

One-sided Communication(OSC) 用于实现 MPI-2 标准的单向通信章节的接口。 在范围上类似于来自 MPI-1 的点对点通信的 PML。有以下几个主要函数:

- OSC component initialization:初始化给定的单边组件。 此函数应初始化任何组件级数据。组件框架不会延迟打开,因此应尽量减少在此功能期间分配的内存量。

- OSC component finalization:结束给定的单边组件。 此函数应清除在 component_init() 期间分配的任何组件级数据。 它还应该清理在组件生命周期内创建的任何数据,包括任何未完成的模块。

- OSC component query:查询给定info和comm,组件是否可以用于单边通信。 能够将组件用于窗口并不意味着该组件将被选中。 在此调用期间不应修改 win 参数,并且不应分配与此窗口关联的内存。

- OSC component select:已选择此组件来为给定窗口提供单方面的服务。 win->w_osc_module 字段可以更新,内存可以与此窗口相关联。 该模块应在此函数返回后立即准备好使用,并且该模块负责在调用结束之前提供任何所需的集体同步。comm 是用户指定的通信器,因此适用正常的内部使用规则。 换句话说,如果您需要在窗口的生命周期内进行通信,则应在此函数期间调用 comm_dup()。

MPI_Init

1 |

|

ompi_mpi_init是真正mpi初始化的函数。内部设计的很精细,因为要考虑很多多线程同时操作的情况,在各个地方都加了锁。1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

113

114

115

116

117

118

119

120

121

122

123

124

125

126

127

128

129

130

131

132

133

134

135

136

137

138

139

140

141

142

143

144

145

146

147

148

149

150

151

152

153

154

155

156

157

158

159

160

161

162

163

164

165

166

167

168

169

170

171

172

173

174

175

176

177

178

179

180

181

182

183

184

185

186

187

188

189

190

191

192

193

194

195

196

197

198

199

200

201

202

203

204

205

206

207

208

209

210

211

212

213

214

215

216

217

218

219

220

221

222

223

224

225

226

227

228

229

230

231

232

233

234

235

236

237

238

239

240

241

242

243

244

245

246

247

248

249

250

251

252

253

254

255

256

257

258

259

260

261

262

263

264

265

266

267

268

269

270

271

272

273

274

275

276

277

278

279

280

281

282

283

284

285

286

287

288

289

290

291

292

293

294

295

296

297

298

299

300

301

302

303

304

305

306

307

308

309

310

311

312

313

314

315

316

317

318

319

320

321

322

323

324

325

326

327int ompi_mpi_init(int argc, char **argv, int requested, int *provided,

bool reinit_ok)

{

int ret;

char *error = NULL;

char *evar;

volatile bool active;

bool background_fence = false;

pmix_info_t info[2];

pmix_status_t rc;

OMPI_TIMING_INIT(64);

ompi_hook_base_mpi_init_top(argc, argv, requested, provided);

/* Ensure that we were not already initialized or finalized. */

int32_t expected = OMPI_MPI_STATE_NOT_INITIALIZED;

int32_t desired = OMPI_MPI_STATE_INIT_STARTED;

opal_atomic_wmb(); // 内存同步?

if (!opal_atomic_compare_exchange_strong_32(&ompi_mpi_state, &expected,

desired)) {

// 此内置函数实现了原子比较和交换操作。这会将 ompi_mpi_state 的内容与 expected 的内容进行比较。

// 如果相等,则该操作是将 desired 写入 ompi_mpi_state。

// 如果它们不相等,操作是读取和 ompi_mpi_state 写入 expected。

// 避免多个进程/线程同时修改当前MPI状态

// If we failed to atomically transition ompi_mpi_state from

// NOT_INITIALIZED to INIT_STARTED, then someone else already

// did that, and we should return.

if (expected >= OMPI_MPI_STATE_FINALIZE_STARTED) {

opal_show_help("help-mpi-runtime.txt",

"mpi_init: already finalized", true);

return MPI_ERR_OTHER;

} else if (expected >= OMPI_MPI_STATE_INIT_STARTED) {

// In some cases (e.g., oshmem_shmem_init()), we may call

// ompi_mpi_init() multiple times. In such cases, just

// silently return successfully once the initializing

// thread has completed.

if (reinit_ok) {

while (ompi_mpi_state < OMPI_MPI_STATE_INIT_COMPLETED) {

usleep(1);

}

return MPI_SUCCESS;

}

opal_show_help("help-mpi-runtime.txt",

"mpi_init: invoked multiple times", true);

return MPI_ERR_OTHER;

}

}

/* deal with OPAL_PREFIX to ensure that an internal PMIx installation

* is also relocated if necessary */

if (NULL != (evar = getenv("OPAL_PREFIX"))) {

opal_setenv("PMIX_PREFIX", evar, true, &environ);

}

ompi_mpi_thread_level(requested, provided); // 设置线程级别

ret = ompi_mpi_instance_init (*provided, &ompi_mpi_info_null.info.super, MPI_ERRORS_ARE_FATAL, &ompi_mpi_instance_default);

// 创建一个新的MPI实例,

if (OPAL_UNLIKELY(OMPI_SUCCESS != ret)) {

error = "ompi_mpi_init: ompi_mpi_instance_init failed";

goto error;

}

ompi_hook_base_mpi_init_top_post_opal(argc, argv, requested, provided);

/* initialize communicator subsystem,

communicator MPI_COMM_WORLD and MPI_COMM_SELF

构建通信域结构体,保存进程数信息

通过ompi_group_translate_ranks函数得到rank

通过遍历找到通信域内与本进程对应的rank么

*/

if (OMPI_SUCCESS != (ret = ompi_comm_init_mpi3 ())) {

error = "ompi_mpi_init: ompi_comm_init_mpi3 failed";

goto error;

}

/* Bozo argument check */

if (NULL == argv && argc > 1) {

ret = OMPI_ERR_BAD_PARAM;

error = "argc > 1, but argv == NULL";

goto error;

}

/* if we were not externally started, then we need to setup

* some envars so the MPI_INFO_ENV can get the cmd name

* and argv (but only if the user supplied a non-NULL argv!), and

* the requested thread level

*/

if (NULL == getenv("OMPI_COMMAND") && NULL != argv && NULL != argv[0]) {

opal_setenv("OMPI_COMMAND", argv[0], true, &environ);

}

if (NULL == getenv("OMPI_ARGV") && 1 < argc) {

char *tmp;

tmp = opal_argv_join(&argv[1], ' ');

opal_setenv("OMPI_ARGV", tmp, true, &environ);

free(tmp);

}

if (OMPI_TIMING_ENABLED && !opal_pmix_base_async_modex &&

opal_pmix_collect_all_data && !ompi_singleton) {

if (PMIX_SUCCESS != (rc = PMIx_Fence(NULL, 0, NULL, 0))) {

ret = opal_pmix_convert_status(rc);

error = "timing: pmix-barrier-1 failed";

goto error;

}

OMPI_TIMING_NEXT("pmix-barrier-1");

if (PMIX_SUCCESS != (rc = PMIx_Fence(NULL, 0, NULL, 0))) {

ret = opal_pmix_convert_status(rc);

error = "timing: pmix-barrier-2 failed";

goto error;

}

OMPI_TIMING_NEXT("pmix-barrier-2");

}

if (!ompi_singleton) {

if (opal_pmix_base_async_modex) {

/* if we are doing an async modex, but we are collecting all

* data, then execute the non-blocking modex in the background.

* All calls to modex_recv will be cached until the background

* modex completes. If collect_all_data is false, then we skip

* the fence completely and retrieve data on-demand from the

* source node.

*/

if (opal_pmix_collect_all_data) {

/* execute the fence_nb in the background to collect

* the data */

background_fence = true;

active = true;

OPAL_POST_OBJECT(&active);

PMIX_INFO_LOAD(&info[0], PMIX_COLLECT_DATA, &opal_pmix_collect_all_data, PMIX_BOOL);

if( PMIX_SUCCESS != (rc = PMIx_Fence_nb(NULL, 0, NULL, 0,

fence_release,

(void*)&active))) {

ret = opal_pmix_convert_status(rc);

error = "PMIx_Fence_nb() failed";

goto error;

}

}

} else {

/* we want to do the modex - we block at this point, but we must

* do so in a manner that allows us to call opal_progress so our

* event library can be cycled as we have tied PMIx to that

* event base */

active = true;

OPAL_POST_OBJECT(&active);

PMIX_INFO_LOAD(&info[0], PMIX_COLLECT_DATA, &opal_pmix_collect_all_data, PMIX_BOOL);

rc = PMIx_Fence_nb(NULL, 0, info, 1, fence_release, (void*)&active);

if( PMIX_SUCCESS != rc) {

ret = opal_pmix_convert_status(rc);

error = "PMIx_Fence() failed";

goto error;

}

/* cannot just wait on thread as we need to call opal_progress */

OMPI_LAZY_WAIT_FOR_COMPLETION(active);

}

}

OMPI_TIMING_NEXT("modex");

// 把当前这两个通信域加进来

MCA_PML_CALL(add_comm(&ompi_mpi_comm_world.comm));

MCA_PML_CALL(add_comm(&ompi_mpi_comm_self.comm));

// 这是fault tolerant相关的结构

/* initialize the fault tolerant infrastructure (revoke, detector,

* propagator) */

if( ompi_ftmpi_enabled ) {

const char *evmethod;

rc = ompi_comm_rbcast_init();

if( OMPI_SUCCESS != rc ) return rc;

rc = ompi_comm_revoke_init();

if( OMPI_SUCCESS != rc ) return rc;

rc = ompi_comm_failure_propagator_init();

if( OMPI_SUCCESS != rc ) return rc;

rc = ompi_comm_failure_detector_init();

if( OMPI_SUCCESS != rc ) return rc;

evmethod = event_base_get_method(opal_sync_event_base);

if( 0 == strcmp("select", evmethod) ) {

opal_show_help("help-mpi-ft.txt", "module:event:selectbug", true);

}

}

/*

* Dump all MCA parameters if requested

*/

if (ompi_mpi_show_mca_params) {

ompi_show_all_mca_params(ompi_mpi_comm_world.comm.c_my_rank,

ompi_process_info.num_procs,

ompi_process_info.nodename);

}

/* Do we need to wait for a debugger? */

ompi_rte_wait_for_debugger();

/* Next timing measurement */

OMPI_TIMING_NEXT("modex-barrier");

if (!ompi_singleton) {

/* if we executed the above fence in the background, then

* we have to wait here for it to complete. However, there

* is no reason to do two barriers! */

if (background_fence) {

OMPI_LAZY_WAIT_FOR_COMPLETION(active);

} else if (!ompi_async_mpi_init) {

/* wait for everyone to reach this point - this is a hard

* barrier requirement at this time, though we hope to relax

* it at a later point */

bool flag = false;

active = true;

OPAL_POST_OBJECT(&active);

PMIX_INFO_LOAD(&info[0], PMIX_COLLECT_DATA, &flag, PMIX_BOOL);

if (PMIX_SUCCESS != (rc = PMIx_Fence_nb(NULL, 0, info, 1,

fence_release, (void*)&active))) {

ret = opal_pmix_convert_status(rc);

error = "PMIx_Fence_nb() failed";

goto error;

}

OMPI_LAZY_WAIT_FOR_COMPLETION(active);

}

}

/* check for timing request - get stop time and report elapsed

time if so, then start the clock again */

OMPI_TIMING_NEXT("barrier");

/* Start setting up the event engine for MPI operations. Don't

block in the event library, so that communications don't take

forever between procs in the dynamic code. This will increase

CPU utilization for the remainder of MPI_INIT when we are

blocking on RTE-level events, but may greatly reduce non-TCP

latency. */

int old_event_flags = opal_progress_set_event_flag(0);

opal_progress_set_event_flag(old_event_flags | OPAL_EVLOOP_NONBLOCK);

/* wire up the mpi interface, if requested. Do this after the

non-block switch for non-TCP performance. Do before the

polling change as anyone with a complex wire-up is going to be

using the oob.

预先执行一些MPI send recv,建立连接?

*/

if (OMPI_SUCCESS != (ret = ompi_init_preconnect_mpi())) {

error = "ompi_mpi_do_preconnect_all() failed";

goto error;

}

/* Init coll for the comms. This has to be after dpm_base_select,

(since dpm.mark_dyncomm is not set in the communicator creation

function else), but before dpm.dyncom_init, since this function

might require collective for the CID allocation.

设置集合通信相关的函数指针

*/

if (OMPI_SUCCESS !=

(ret = mca_coll_base_comm_select(MPI_COMM_WORLD))) {

error = "mca_coll_base_comm_select(MPI_COMM_WORLD) failed";

goto error;

}

if (OMPI_SUCCESS !=

(ret = mca_coll_base_comm_select(MPI_COMM_SELF))) {

error = "mca_coll_base_comm_select(MPI_COMM_SELF) failed";

goto error;

}

/* start the failure detector */

if( ompi_ftmpi_enabled ) {

rc = ompi_comm_failure_detector_start();

if( OMPI_SUCCESS != rc ) return rc;

}

/* Check whether we have been spawned or not. We introduce that

at the very end, since we need collectives, datatypes, ptls

etc. up and running here....

此例程检查应用程序是否已由另一个 MPI 应用程序生成,或者是否已独立启动。

如果它已经产生,它建立父通信器。

由于例程必须进行通信,因此它应该是 MPI_Init 的最后一步,以确保一切都已设置好。

*/

if (OMPI_SUCCESS != (ret = ompi_dpm_dyn_init())) {

return ret;

}

/* Fall through */

error:

if (ret != OMPI_SUCCESS) {

/* Only print a message if one was not already printed */

if (NULL != error && OMPI_ERR_SILENT != ret) {

const char *err_msg = opal_strerror(ret);

opal_show_help("help-mpi-runtime.txt",

"mpi_init:startup:internal-failure", true,

"MPI_INIT", "MPI_INIT", error, err_msg, ret);

}

ompi_hook_base_mpi_init_error(argc, argv, requested, provided);

OMPI_TIMING_FINALIZE;

return ret;

}

/* All done. Wasn't that simple? */

opal_atomic_wmb();

opal_atomic_swap_32(&ompi_mpi_state, OMPI_MPI_STATE_INIT_COMPLETED);

// 原子性地设置标志位为已完成初始化

/* Finish last measurement, output results

* and clear timing structure */

OMPI_TIMING_NEXT("barrier-finish");

OMPI_TIMING_OUT;

OMPI_TIMING_FINALIZE;

ompi_hook_base_mpi_init_bottom(argc, argv, requested, provided);

return MPI_SUCCESS;

}

这里分别搞了两个communicator,分别是word和self,communicator有以下的状态,看英文就能看出来意思,通过位运算设置状态。1

2

3

4

5

6

7

8

9

10

11

12

13

MPI_Comm_rank

MPI_Comm_rank是获得进程在通信域的rank。1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24int MPI_Comm_rank(MPI_Comm comm, int *rank)

{

MEMCHECKER(

memchecker_comm(comm);

);

if ( MPI_PARAM_CHECK ) {

OMPI_ERR_INIT_FINALIZE(FUNC_NAME);

// 需要检查MPI是否已经初始化完成了,MPI通信域是不是合法的通信域,rank指针是否是空指针。

// MPI-2:4.12.4 明确指出 MPI_*_C2F 和 MPI_*_F2C 函数应将 MPI_COMM_NULL 视为有效的通信器

// openmpi将 ompi_comm_invalid() 保留为原始编码——根据 MPI-1 定义,其中 MPI_COMM_NULL 是无效的通信域。

// 因此,MPI_Comm_c2f() 函数调用 ompi_comm_invalid() 但也显式检查句柄是否为 MPI_COMM_NULL。

if (ompi_comm_invalid (comm))

return OMPI_ERRHANDLER_NOHANDLE_INVOKE(MPI_ERR_COMM,

FUNC_NAME);

if ( NULL == rank )

return OMPI_ERRHANDLER_INVOKE(comm, MPI_ERR_ARG,

FUNC_NAME);

}

*rank = ompi_comm_rank((ompi_communicator_t*)comm);

return MPI_SUCCESS;

ompi_comm_rank这个函数主要是返回结构体ompi_communicator_t的变量,结构体ompi_communicator_t如下,包括了集合通信,笛卡尔结构相关的数据结构1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

struct ompi_communicator_t {

opal_infosubscriber_t super;

opal_mutex_t c_lock; /* 互斥锁,为了修改变量用的可能 */

char c_name[MPI_MAX_OBJECT_NAME]; /* 比如MPI_COMM_WORLD之类的 */

ompi_comm_extended_cid_t c_contextid;

ompi_comm_extended_cid_block_t c_contextidb;

uint32_t c_index;

int c_my_rank;

uint32_t c_flags; /* flags, e.g. intercomm,

topology, etc. */

uint32_t c_assertions; /* info assertions */

int c_id_available; /* the currently available Cid for allocation

to a child*/

int c_id_start_index; /* the starting index of the block of cids

allocated to this communicator*/

uint32_t c_epoch; /* Identifier used to differenciate between two communicators

using the same c_contextid (not at the same time, obviously) */

ompi_group_t *c_local_group;

ompi_group_t *c_remote_group; // 应该是存储了属于这个通信组的proc?

struct ompi_communicator_t *c_local_comm; /* a duplicate of the

local communicator in

case the comm is an

inter-comm*/

/* Attributes */

struct opal_hash_table_t *c_keyhash;

// 这些应该是笛卡尔结构相关的

/**< inscribing cube dimension */

int c_cube_dim;

/* Standard information about the selected topology module (or NULL

if this is not a cart, graph or dist graph communicator) */

struct mca_topo_base_module_t* c_topo;

/* index in Fortran <-> C translation array */

int c_f_to_c_index;

/*

* Place holder for the PERUSE events.

*/

struct ompi_peruse_handle_t** c_peruse_handles;

/* Error handling. This field does not have the "c_" prefix so

that the OMPI_ERRHDL_* macros can find it, regardless of whether

it's a comm, window, or file. */

ompi_errhandler_t *error_handler;

ompi_errhandler_type_t errhandler_type;

/* Hooks for PML to hang things */

struct mca_pml_comm_t *c_pml_comm;

/* Hooks for MTL to hang things */

struct mca_mtl_comm_t *c_mtl_comm;

/* Collectives module interface and data */

mca_coll_base_comm_coll_t *c_coll;

/* Non-blocking collective tag. These tags might be shared between

* all non-blocking collective modules (to avoid message collision

* between them in the case where multiple outstanding non-blocking

* collective coexists using multiple backends).

* 非阻塞的集合通信

*/

opal_atomic_int32_t c_nbc_tag;

/* instance that this comm belongs to */

ompi_instance_t* instance;

/** MPI_ANY_SOURCE Failed Group Offset - OMPI_Comm_failure_get_acked */

int any_source_offset;

/** agreement caching info for topology and previous returned decisions */

opal_object_t *agreement_specific;

/** Are MPI_ANY_SOURCE operations enabled? - OMPI_Comm_failure_ack */

bool any_source_enabled;

/** Has this communicator been revoked - OMPI_Comm_revoke() */

bool comm_revoked;

/** Force errors to collective pt2pt operations? */

bool coll_revoked;

};

typedef struct ompi_communicator_t ompi_communicator_t;

保存属于这个通信组的进程,有四种方法。1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33/**

* Group structure

* Currently we have four formats for storing the process pointers that are members

* of the group.

* PList: a dense format that stores all the process pointers of the group.

* Sporadic: a sparse format that stores the ranges of the ranks from the parent group,

* that are included in the current group.

* Strided: a sparse format that stores three integers that describe a red-black pattern

* that the current group is formed from its parent group.

* Bitmap: a sparse format that maintains a bitmap of the included processes from the

* parent group. For each process that is included from the parent group

* its corresponding rank is set in the bitmap array.

*/

struct ompi_group_t {

opal_object_t super; /**< base class */

int grp_proc_count; /**< number of processes in group */

int grp_my_rank; /**< rank in group */

int grp_f_to_c_index; /**< index in Fortran <-> C translation array */

struct ompi_proc_t **grp_proc_pointers;

/**< list of pointers to ompi_proc_t structures

for each process in the group */

uint32_t grp_flags; /**< flags, e.g. freed, cannot be freed etc.*/

/** pointer to the original group when using sparse storage */

struct ompi_group_t *grp_parent_group_ptr;

union

{

struct ompi_group_sporadic_data_t grp_sporadic;

struct ompi_group_strided_data_t grp_strided;

struct ompi_group_bitmap_data_t grp_bitmap;

} sparse_data;

ompi_instance_t *grp_instance; /**< instance this group was allocated within */

};

MPI_Abort

MPI_Abort主要是打印错误信息后等待退出所有进程1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

int

ompi_mpi_abort(struct ompi_communicator_t* comm,

int errcode)

{

const char *host;

pid_t pid = 0;

/* Protection for recursive invocation */

if (have_been_invoked) {

return OMPI_SUCCESS;

}

have_been_invoked = true;

/* If MPI is initialized, we know we have a runtime nodename, so

use that. Otherwise, call opal_gethostname. */

if (ompi_rte_initialized) {

host = ompi_process_info.nodename;

} else {

host = opal_gethostname();

}

pid = getpid();

/* Should we print a stack trace? Not aggregated because they

might be different on all processes. */

if (opal_abort_print_stack) {

char **messages;

int len, i;

if (OPAL_SUCCESS == opal_backtrace_buffer(&messages, &len)) {

// 调用了linux内部的backtrace函数打印调用栈,需要#include <execinfo.h>

for (i = 0; i < len; ++i) {

fprintf(stderr, "[%s:%05d] [%d] func:%s\n", host, (int) pid,

i, messages[i]);

fflush(stderr);

}

free(messages);

} else {

/* This will print an message if it's unable to print the

backtrace, so we don't need an additional "else" clause

if opal_backtrace_print() is not supported. */

opal_backtrace_print(stderr, NULL, 1);

}

}

/* Wait for a while before aborting */

opal_delay_abort();

/* If the RTE isn't setup yet/any more, then don't even try

killing everyone. Sorry, Charlie... */

int32_t state = ompi_mpi_state;

if (!ompi_rte_initialized) {

fprintf(stderr, "[%s:%05d] Local abort %s completed successfully, but am not able to aggregate error messages, and not able to guarantee that all other processes were killed!\n",

host, (int) pid,

state >= OMPI_MPI_STATE_FINALIZE_STARTED ?

"after MPI_FINALIZE started" : "before MPI_INIT completed");

_exit(errcode == 0 ? 1 : errcode);

}

/* If OMPI is initialized and we have a non-NULL communicator,

then try to kill just that set of processes */

if (state >= OMPI_MPI_STATE_INIT_COMPLETED &&

state < OMPI_MPI_STATE_FINALIZE_PAST_COMM_SELF_DESTRUCT &&

NULL != comm) {

try_kill_peers(comm, errcode); /* kill only the specified groups, no return if it worked. */

}

/* We can fall through to here in a few cases:

1. The attempt to kill just a subset of peers via

try_kill_peers() failed.

2. MPI wasn't initialized, was already finalized, or we got a

NULL communicator.

In all of these cases, the only sensible thing left to do is to

kill the entire job. Wah wah. */

ompi_rte_abort(errcode, NULL);

/* Does not return - but we add a return to keep compiler warnings at bay*/

return 0;

}

MPI_Barrier

MPI_Barrier主要是检查参数之后调用coll_barrier。在两个进程的特例中,只有一个send-recv。

1 | int MPI_Barrier(MPI_Comm comm) |

coll_barrier应该是函数指针:1

2typedef int (*mca_coll_base_module_barrier_fn_t)

(struct ompi_communicator_t *comm, struct mca_coll_base_module_2_4_0_t *module);

函数指针可能的值有:1

2

3

4

5

6

7mca_coll_basic_barrier_inter_lin

ompi_coll_base_barrier_intra_basic_linear

mca_coll_basic_barrier_intra_log

mca_scoll_basic_barrier

mca_scoll_mpi_barrier

scoll_null_barrier

前三个是O(log(N))的,以mca_coll_basic_barrier_intra_log为例。这应该是将进程组织成树的形式,以位运算隐掉某一位来计算孩子进程号,通过send/recv空消息实现barrier。1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75int

mca_coll_basic_barrier_intra_log(struct ompi_communicator_t *comm,

mca_coll_base_module_t *module)

{

int i;

int err;

int peer;

int dim;

int hibit;

int mask;

int size = ompi_comm_size(comm);

int rank = ompi_comm_rank(comm);

/* Send null-messages up and down the tree. Synchronization at the

* root (rank 0). */

dim = comm->c_cube_dim;

hibit = opal_hibit(rank, dim);

--dim;

/* Receive from children. */

for (i = dim, mask = 1 << i; i > hibit; --i, mask >>= 1) {

peer = rank | mask;

if (peer < size) {

err = MCA_PML_CALL(recv(NULL, 0, MPI_BYTE, peer,

MCA_COLL_BASE_TAG_BARRIER,

comm, MPI_STATUS_IGNORE));

if (MPI_SUCCESS != err) {

return err;

}

}

// children就是比我大的或者等于我的

}

/* Send to and receive from parent. */

if (rank > 0) {

peer = rank & ~(1 << hibit);

err =

MCA_PML_CALL(send

(NULL, 0, MPI_BYTE, peer,

MCA_COLL_BASE_TAG_BARRIER,

MCA_PML_BASE_SEND_STANDARD, comm));

if (MPI_SUCCESS != err) {

return err;

}

err = MCA_PML_CALL(recv(NULL, 0, MPI_BYTE, peer,

MCA_COLL_BASE_TAG_BARRIER,

comm, MPI_STATUS_IGNORE));

if (MPI_SUCCESS != err) {

return err;

}

// parent就是比自己小的,所以要把某一位变成0

}

/* Send to children. */

for (i = hibit + 1, mask = 1 << i; i <= dim; ++i, mask <<= 1) {

peer = rank | mask;

if (peer < size) {

err = MCA_PML_CALL(send(NULL, 0, MPI_BYTE, peer,

MCA_COLL_BASE_TAG_BARRIER,

MCA_PML_BASE_SEND_STANDARD, comm));

if (MPI_SUCCESS != err) {

return err;

}

}

}

/* All done */

return MPI_SUCCESS;

}

这个直接是调用的allreduce,可省事了。1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19/*

* barrier_inter_lin

*

* Function: - barrier using O(log(N)) algorithm

* Accepts: - same as MPI_Barrier()

* Returns: - MPI_SUCCESS or error code

*/

int

mca_coll_basic_barrier_inter_lin(struct ompi_communicator_t *comm,

mca_coll_base_module_t *module)

{

int rank;

int result;

rank = ompi_comm_rank(comm);

return comm->c_coll->coll_allreduce(&rank, &result, 1, MPI_INT, MPI_MAX,

comm, comm->c_coll->coll_allreduce_module);

}

ompi_coll_base_barrier_intra_basic_linear函数是从 BASIC coll 模块复制的,它不分割消息并且是简单的实现,但是对于一些少量节点和/或小数据大小,它们与基于树的分割操作一样快,因此可以选择这个。1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50int ompi_coll_base_barrier_intra_basic_linear(struct ompi_communicator_t *comm,

mca_coll_base_module_t *module)

{

int i, err, rank, size, line;

ompi_request_t** requests = NULL;

size = ompi_comm_size(comm);

if( 1 == size )

return MPI_SUCCESS;

rank = ompi_comm_rank(comm);

/* All non-root send & receive zero-length message to root. */

if (rank > 0) {

err = MCA_PML_CALL(send (NULL, 0, MPI_BYTE, 0,

MCA_COLL_BASE_TAG_BARRIER,

MCA_PML_BASE_SEND_STANDARD, comm));

if (MPI_SUCCESS != err) { line = __LINE__; goto err_hndl; }

err = MCA_PML_CALL(recv (NULL, 0, MPI_BYTE, 0,

MCA_COLL_BASE_TAG_BARRIER,

comm, MPI_STATUS_IGNORE));

if (MPI_SUCCESS != err) { line = __LINE__; goto err_hndl; }

}

/* The root collects and broadcasts the messages from all other process. */

else {

requests = ompi_coll_base_comm_get_reqs(module->base_data, size);

if( NULL == requests ) { err = OMPI_ERR_OUT_OF_RESOURCE; line = __LINE__; goto err_hndl; }

for (i = 1; i < size; ++i) {

err = MCA_PML_CALL(irecv(NULL, 0, MPI_BYTE, MPI_ANY_SOURCE,

MCA_COLL_BASE_TAG_BARRIER, comm,

&(requests[i])));

if (MPI_SUCCESS != err) { line = __LINE__; goto err_hndl; }

}

err = ompi_request_wait_all( size-1, requests+1, MPI_STATUSES_IGNORE );

if (MPI_SUCCESS != err) { line = __LINE__; goto err_hndl; }

requests = NULL; /* we're done the requests array is clean */

for (i = 1; i < size; ++i) {

err = MCA_PML_CALL(send(NULL, 0, MPI_BYTE, i,

MCA_COLL_BASE_TAG_BARRIER,

MCA_PML_BASE_SEND_STANDARD, comm));

if (MPI_SUCCESS != err) { line = __LINE__; goto err_hndl; }

}

}

/* All done */

return MPI_SUCCESS;

}

double ring方法在很多MPI算法里都有,barrier里也有double ring的实现。向左右的进程发送和接收数据。1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39int ompi_coll_base_barrier_intra_doublering(struct ompi_communicator_t *comm,

mca_coll_base_module_t *module)

{

int rank, size, err = 0, line = 0, left, right;

size = ompi_comm_size(comm);

if( 1 == size )

return OMPI_SUCCESS;

rank = ompi_comm_rank(comm);

OPAL_OUTPUT((ompi_coll_base_framework.framework_output,"ompi_coll_base_barrier_intra_doublering rank %d", rank));

left = ((size+rank-1)%size);

right = ((rank+1)%size);

if (rank > 0) /* receive message from the left */

err = MCA_PML_CALL(recv((void*)NULL, 0, MPI_BYTE, left, MCA_COLL_BASE_TAG_BARRIER, comm, MPI_STATUS_IGNORE));

/* Send message to the right */

err = MCA_PML_CALL(send((void*)NULL, 0, MPI_BYTE, right, MCA_COLL_BASE_TAG_BARRIER, MCA_PML_BASE_SEND_STANDARD, comm));

/* root needs to receive from the last node */

if (rank == 0)

err = MCA_PML_CALL(recv((void*)NULL, 0, MPI_BYTE, left, MCA_COLL_BASE_TAG_BARRIER, comm, MPI_STATUS_IGNORE));

/* Allow nodes to exit */

if (rank > 0) /* post Receive from left */

err = MCA_PML_CALL(recv((void*)NULL, 0, MPI_BYTE, left, MCA_COLL_BASE_TAG_BARRIER, comm, MPI_STATUS_IGNORE));

/* send message to the right one */

err = MCA_PML_CALL(send((void*)NULL, 0, MPI_BYTE, right, MCA_COLL_BASE_TAG_BARRIER, MCA_PML_BASE_SEND_SYNCHRONOUS, comm));

/* rank 0 post receive from the last node */

if (rank == 0)

err = MCA_PML_CALL(recv((void*)NULL, 0, MPI_BYTE, left, MCA_COLL_BASE_TAG_BARRIER, comm, MPI_STATUS_IGNORE));

return MPI_SUCCESS;

}

还有一种先是把进程数调整到2的n次方,对于多余的进程先进行一次同步,再在进程之间两两交换通信,同样是根据位运算来的1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73/*

* To make synchronous, uses sync sends and sync sendrecvs

*/

int ompi_coll_base_barrier_intra_recursivedoubling(struct ompi_communicator_t *comm,

mca_coll_base_module_t *module)

{

int rank, size, adjsize, err, line, mask, remote;

size = ompi_comm_size(comm);

if( 1 == size )

return OMPI_SUCCESS;

rank = ompi_comm_rank(comm);

OPAL_OUTPUT((ompi_coll_base_framework.framework_output,

"ompi_coll_base_barrier_intra_recursivedoubling rank %d",

rank));

/* do nearest power of 2 less than size calc */

adjsize = opal_next_poweroftwo(size);

adjsize >>= 1;

/* if size is not exact power of two, perform an extra step */

if (adjsize != size) {

if (rank >= adjsize) {

/* send message to lower ranked node */

remote = rank - adjsize;

err = ompi_coll_base_sendrecv_zero(remote, MCA_COLL_BASE_TAG_BARRIER,

remote, MCA_COLL_BASE_TAG_BARRIER,

comm);

if (err != MPI_SUCCESS) { line = __LINE__; goto err_hndl;}

} else if (rank < (size - adjsize)) {

/* receive message from high level rank */

err = MCA_PML_CALL(recv((void*)NULL, 0, MPI_BYTE, rank+adjsize,

MCA_COLL_BASE_TAG_BARRIER, comm,

MPI_STATUS_IGNORE));

if (err != MPI_SUCCESS) { line = __LINE__; goto err_hndl;}

}

}

/* exchange messages */

if ( rank < adjsize ) {

mask = 0x1;

while ( mask < adjsize ) {

remote = rank ^ mask;

mask <<= 1;

if (remote >= adjsize) continue;

/* post receive from the remote node */

err = ompi_coll_base_sendrecv_zero(remote, MCA_COLL_BASE_TAG_BARRIER,

remote, MCA_COLL_BASE_TAG_BARRIER,

comm);

if (err != MPI_SUCCESS) { line = __LINE__; goto err_hndl;}

}

}

/* non-power of 2 case */

if (adjsize != size) {

if (rank < (size - adjsize)) {

/* send enter message to higher ranked node */

remote = rank + adjsize;

err = MCA_PML_CALL(send((void*)NULL, 0, MPI_BYTE, remote,

MCA_COLL_BASE_TAG_BARRIER,

MCA_PML_BASE_SEND_SYNCHRONOUS, comm));

if (err != MPI_SUCCESS) { line = __LINE__; goto err_hndl;}

}

}

return MPI_SUCCESS;

}

在不同间隔的进程之间进行交换,真的能实现barrier。1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25int ompi_coll_base_barrier_intra_bruck(struct ompi_communicator_t *comm,

mca_coll_base_module_t *module)

{

int rank, size, distance, to, from, err, line = 0;

size = ompi_comm_size(comm);

if( 1 == size )

return MPI_SUCCESS;

rank = ompi_comm_rank(comm);

OPAL_OUTPUT((ompi_coll_base_framework.framework_output,

"ompi_coll_base_barrier_intra_bruck rank %d", rank));

/* exchange data with rank-2^k and rank+2^k */

for (distance = 1; distance < size; distance <<= 1) {

from = (rank + size - distance) % size;

to = (rank + distance) % size;

/* send message to lower ranked node */

err = ompi_coll_base_sendrecv_zero(to, MCA_COLL_BASE_TAG_BARRIER,

from, MCA_COLL_BASE_TAG_BARRIER,

comm);

}

return MPI_SUCCESS;

}

MPI_Bcast

bcast首先检查内存区是否不是空,再调用coll_bcast,同样是函数指针。1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18int MPI_Bcast(void *buffer, int count, MPI_Datatype datatype,

int root, MPI_Comm comm)

{

/* .... 主要是检查通信域和buffer是否合法,略*/

err = comm->c_coll->coll_bcast(buffer, count, datatype, root, comm,

comm->c_coll->coll_bcast_module);

OMPI_ERRHANDLER_RETURN(err, comm, err, FUNC_NAME);

}

typedef int (*mca_coll_base_module_bcast_init_fn_t)

(void *buff,

int count,

struct ompi_datatype_t *datatype,

int root,

struct ompi_communicator_t *comm,

struct ompi_info_t *info,

ompi_request_t ** request,

struct mca_coll_base_module_2_4_0_t *module);

bcast主要以下几种:bcast相关的算法应该有:0: tuned, 1: binomial, 2: in_order_binomial, 3: binary, 4: pipeline, 5: chain, 6: linear1

2

3

4

5int ompi_coll_adapt_bcast(BCAST_ARGS);

调用

int ompi_coll_adapt_ibcast

调用

int ompi_coll_adapt_ibcast_generic

ompi_coll_adapt_ibcast_generic是底层的调用,首先创建temp_request,标明source,tag等。计算要bcast的数据的segment数,有个宏提供了一种计算段的最佳计数的通用方法(即可以适合指定 SEGSIZE 的完整数据类型的数量)。并在堆上给分配空间,以便其他函数访问。如果是根进程,则向所有子进程发送,否则向根进程接收。1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

113

114

115

116

117

118

119

120

121

122

123

124

125

126

127

128

129

130

131

132

133

134

135

136

137

138

139

140

141

142

143

144

145

146

147

148

149

150

151

152

153

154

155

156

157

158

159

160

161

162

163

164

165

166

167

168

169

170

171

172

173

174

175

176

177

178

179

180

181

182

183

184

185

186

187

188

189

190

191

192

193

194

195

196

197

198

199

200

201

202

203

204

205

206

207

208

209

210

211

212

213

214

215

216

217

218

219

220

221

222

223

224

225

226

227

228

229

230

231

232

233

234

235int ompi_coll_adapt_ibcast_generic(void *buff, int count, struct ompi_datatype_t *datatype, int root,

struct ompi_communicator_t *comm, ompi_request_t ** request,

mca_coll_base_module_t * module, ompi_coll_tree_t * tree,

size_t seg_size)

{

int i, j, rank, err;

/* The min of num_segs and SEND_NUM or RECV_NUM, in case the num_segs is less than SEND_NUM or RECV_NUM */

int min;

/* Number of datatype in a segment */

int seg_count = count;

/* Size of a datatype */

size_t type_size;

/* Real size of a segment */

size_t real_seg_size;

ptrdiff_t extent, lb;

/* Number of segments */

int num_segs;

mca_pml_base_send_mode_t sendmode = (mca_coll_adapt_component.adapt_ibcast_synchronous_send)

? MCA_PML_BASE_SEND_SYNCHRONOUS : MCA_PML_BASE_SEND_STANDARD;

/* The request passed outside */

ompi_coll_base_nbc_request_t *temp_request = NULL;

opal_mutex_t *mutex;

/* Store the segments which are received */

int *recv_array = NULL;

/* Record how many isends have been issued for every child */

int *send_array = NULL;

/* Atomically set up free list */

if (NULL == mca_coll_adapt_component.adapt_ibcast_context_free_list) {

opal_free_list_t* fl = OBJ_NEW(opal_free_list_t);

opal_free_list_init(fl,

sizeof(ompi_coll_adapt_bcast_context_t),

opal_cache_line_size,

OBJ_CLASS(ompi_coll_adapt_bcast_context_t),

0, opal_cache_line_size,

mca_coll_adapt_component.adapt_context_free_list_min,

mca_coll_adapt_component.adapt_context_free_list_max,

mca_coll_adapt_component.adapt_context_free_list_inc,

NULL, 0, NULL, NULL, NULL);

if( !OPAL_ATOMIC_COMPARE_EXCHANGE_STRONG_PTR((opal_atomic_intptr_t *)&mca_coll_adapt_component.adapt_ibcast_context_free_list,

&(intptr_t){0}, fl) ) {

OBJ_RELEASE(fl);

}

}

/* Set up request */

temp_request = OBJ_NEW(ompi_coll_base_nbc_request_t);

OMPI_REQUEST_INIT(&temp_request->super, false);

temp_request->super.req_state = OMPI_REQUEST_ACTIVE;

temp_request->super.req_type = OMPI_REQUEST_COLL;

temp_request->super.req_free = ompi_coll_adapt_request_free;

temp_request->super.req_status.MPI_SOURCE = 0;

temp_request->super.req_status.MPI_TAG = 0;

temp_request->super.req_status.MPI_ERROR = 0;

temp_request->super.req_status._cancelled = 0;

temp_request->super.req_status._ucount = 0;

*request = (ompi_request_t*)temp_request;

/* Set up mutex */

mutex = OBJ_NEW(opal_mutex_t);

rank = ompi_comm_rank(comm);

/* Determine number of elements sent per operation */

ompi_datatype_type_size(datatype, &type_size);

COLL_BASE_COMPUTED_SEGCOUNT(seg_size, type_size, seg_count);

ompi_datatype_get_extent(datatype, &lb, &extent);

num_segs = (count + seg_count - 1) / seg_count;

real_seg_size = (ptrdiff_t) seg_count *extent;

/* Set memory for recv_array and send_array, created on heap becasue they are needed to be accessed by other functions (callback functions) */

if (num_segs != 0) {

recv_array = (int *) malloc(sizeof(int) * num_segs);

}

if (tree->tree_nextsize != 0) {

send_array = (int *) malloc(sizeof(int) * tree->tree_nextsize);

}

/* Set constant context for send and recv call back */

ompi_coll_adapt_constant_bcast_context_t *con = OBJ_NEW(ompi_coll_adapt_constant_bcast_context_t);

con->root = root;

con->count = count;

con->seg_count = seg_count;

con->datatype = datatype;

con->comm = comm;

con->real_seg_size = real_seg_size;

con->num_segs = num_segs;

con->recv_array = recv_array;

con->num_recv_segs = 0;

con->num_recv_fini = 0;

con->send_array = send_array;

con->num_sent_segs = 0;

con->mutex = mutex;

con->request = (ompi_request_t*)temp_request;

con->tree = tree;

con->ibcast_tag = ompi_coll_base_nbc_reserve_tags(comm, num_segs);

OPAL_OUTPUT_VERBOSE((30, mca_coll_adapt_component.adapt_output,

"[%d]: Ibcast, root %d, tag %d\n", rank, root,

con->ibcast_tag));

OPAL_OUTPUT_VERBOSE((30, mca_coll_adapt_component.adapt_output,

"[%d]: con->mutex = %p, num_children = %d, num_segs = %d, real_seg_size = %d, seg_count = %d, tree_adreess = %p\n",

rank, (void *) con->mutex, tree->tree_nextsize, num_segs,

(int) real_seg_size, seg_count, (void *) con->tree));

OPAL_THREAD_LOCK(mutex);

/* If the current process is root, it sends segment to every children */

if (rank == root) {

/* Handle the situation when num_segs < SEND_NUM */

if (num_segs <= mca_coll_adapt_component.adapt_ibcast_max_send_requests) {

min = num_segs;

} else {

min = mca_coll_adapt_component.adapt_ibcast_max_send_requests;

}

/* Set recv_array, root has already had all the segments */

for (i = 0; i < num_segs; i++) {

recv_array[i] = i;

}

con->num_recv_segs = num_segs;

/* Set send_array, will send ompi_coll_adapt_ibcast_max_send_requests segments */

for (i = 0; i < tree->tree_nextsize; i++) {

send_array[i] = mca_coll_adapt_component.adapt_ibcast_max_send_requests;

}

ompi_request_t *send_req;

/* Number of datatypes in each send */

int send_count = seg_count;

for (i = 0; i < min; i++) {

if (i == (num_segs - 1)) {

send_count = count - i * seg_count;

}

for (j = 0; j < tree->tree_nextsize; j++) {

ompi_coll_adapt_bcast_context_t *context =

(ompi_coll_adapt_bcast_context_t *) opal_free_list_wait(mca_coll_adapt_component.

adapt_ibcast_context_free_list);

context->buff = (char *) buff + i * real_seg_size;

context->frag_id = i;

/* The id of peer in in children_list */

context->child_id = j;

/* Actural rank of the peer */

context->peer = tree->tree_next[j];

context->con = con;

OBJ_RETAIN(con);

char *send_buff = context->buff;

OPAL_OUTPUT_VERBOSE((30, mca_coll_adapt_component.adapt_output,

"[%d]: Send(start in main): segment %d to %d at buff %p send_count %d tag %d\n",

rank, context->frag_id, context->peer,

(void *) send_buff, send_count, con->ibcast_tag - i));

err =

MCA_PML_CALL(isend

(send_buff, send_count, datatype, context->peer,

con->ibcast_tag - i, sendmode, comm,

&send_req));

if (MPI_SUCCESS != err) {

return err;

}

/* Set send callback */

OPAL_THREAD_UNLOCK(mutex);

ompi_request_set_callback(send_req, send_cb, context);

OPAL_THREAD_LOCK(mutex);

}

}

}

/* If the current process is not root, it receives data from parent in the tree. */

else {

/* Handle the situation when num_segs < RECV_NUM */

if (num_segs <= mca_coll_adapt_component.adapt_ibcast_max_recv_requests) {

min = num_segs;

} else {

min = mca_coll_adapt_component.adapt_ibcast_max_recv_requests;

}

/* Set recv_array, recv_array is empty */

for (i = 0; i < num_segs; i++) {

recv_array[i] = 0;

}

/* Set send_array to empty */

for (i = 0; i < tree->tree_nextsize; i++) {

send_array[i] = 0;

}

/* Create a recv request */

ompi_request_t *recv_req;

/* Recevice some segments from its parent */

int recv_count = seg_count;

for (i = 0; i < min; i++) {

if (i == (num_segs - 1)) {

recv_count = count - i * seg_count;

}

ompi_coll_adapt_bcast_context_t *context =

(ompi_coll_adapt_bcast_context_t *) opal_free_list_wait(mca_coll_adapt_component.

adapt_ibcast_context_free_list);

context->buff = (char *) buff + i * real_seg_size;

context->frag_id = i;

context->peer = tree->tree_prev;

context->con = con;

OBJ_RETAIN(con);

char *recv_buff = context->buff;

OPAL_OUTPUT_VERBOSE((30, mca_coll_adapt_component.adapt_output,

"[%d]: Recv(start in main): segment %d from %d at buff %p recv_count %d tag %d\n",

ompi_comm_rank(context->con->comm), context->frag_id,

context->peer, (void *) recv_buff, recv_count,

con->ibcast_tag - i));

err =

MCA_PML_CALL(irecv

(recv_buff, recv_count, datatype, context->peer,

con->ibcast_tag - i, comm, &recv_req));

if (MPI_SUCCESS != err) {

return err;

}

/* Set receive callback */

OPAL_THREAD_UNLOCK(mutex);

ompi_request_set_callback(recv_req, recv_cb, context);

OPAL_THREAD_LOCK(mutex);

}

}

OPAL_THREAD_UNLOCK(mutex);

OPAL_OUTPUT_VERBOSE((30, mca_coll_adapt_component.adapt_output,

"[%d]: End of Ibcast\n", rank));

return MPI_SUCCESS;

}

此外还找到了如下几个:

- ompi_coll_base_bcast_intra_basic_linear:root发送给所有其他进程

- mca_coll_basic_bcast_log_intra:log复杂度的树形通信

- ompi_coll_base_bcast_intra_generic:树形发送,根节点发送给中间节点,中间节点从根节点中接收,再发送给自己的子节点,叶子节点只负责接收

- mca_coll_sm_bcast_intra:共享内存的bcast

- 找到标志,memcpy,子进程感觉到完成了,再发送给子子进程

- 对于根,一般算法是等待一组段变得可用。一旦它可用,根通过将当前操作号和使用该集合的进程数写入标志来声明该集合。

- 然后根在这组段上循环;对于每个段,它将用户缓冲区的一个片段复制到共享数据段中,然后将数据大小写入其子控制缓冲区。

- 重复该过程,直到已写入所有片段。

- 对于非根,对于每组缓冲区,它们等待直到当前操作号出现在使用标志中(即,由根写入)。

- 然后对于每个段,它们等待一个非零值出现在它们的控制缓冲区中。如果他们有孩子,他们将数据从他们父母的共享数据段复制到他们的共享数据段,并将数据大小写入他们的每个孩子的控制缓冲区。

- 然后,他们将共享的数据段中的数据复制到用户的输出缓冲区中。

- 重复该过程,直到已接收到所有片段。如果他们没有孩子,他们直接将数据从父母的共享数据段复制到用户的输出缓冲区。

- mca_coll_sync_bcast

- 加上了一些barrier

- ompi_coll_tuned_bcast_intra_dec_fixed

- 根据消息大小,进程数选择算法执行bcast

- ompi_coll_base_bcast_intra_bintree:跟ompi_coll_base_bcast_intra_generic一样,树不一样

- ompi_coll_base_bcast_intra_binomial:跟ompi_coll_base_bcast_intra_generic一样

- ompi_coll_base_bcast_intra_knomial:树的子节点数不同,如果radix=2,子节点有1,2,4,8;radix=3,子节点有3,6,9这样。

ompi_coll_base_bcast_intra_scatter_allgather:借助allgather实现bcast,例如,0和1一组,2和3一组,4和5一组,6和7一组,这样第一次就能实现每个进程里两个数据,第二次就是0,1,2,3一组,4,5,6,7一组,每个进程里4个,最后一次就每个进程里8个了。

1 | /* Time complexity: O(\alpha\log(p) + \beta*m((p-1)/p)) |

ompi_coll_base_bcast_intra_scatter_allgather_ring:跟上边的一样,不过每个进程都是跟之前的进程交换1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108/*

* Time complexity: O(\alpha(\log(p) + p) + \beta*m((p-1)/p))

* Binomial tree scatter: \alpha\log(p) + \beta*m((p-1)/p)

* Ring allgather: 2(p-1)(\alpha + m/p\beta)

*

* Example, p=8, count=8, root=0

* Binomial tree scatter Ring allgather: p - 1 steps

* 0: --+ --+ --+ [0*******] [0******7] [0*****67] [0****567] ... [01234567]

* 1: | 2| <-+ [*1******] [01******] [01*****7] [01****67] ... [01234567]

* 2: 4| <-+ --+ [**2*****] [*12*****] [012*****] [012****7] ... [01234567]

* 3: | <-+ [***3****] [**23****] [*123****] [0123****] ... [01234567]

* 4: <-+ --+ --+ [****4***] [***34***] [**234***] [*1234***] ... [01234567]

* 5: 2| <-+ [*****5**] [****45**] [***345**] [**2345**] ... [01234567]

* 6: <-+ --+ [******6*] [*****56*] [****456*] [***3456*] ... [01234567]

* 7: <-+ [*******7] [******67] [*****567] [****4567] ... [01234567]

*/

int ompi_coll_base_bcast_intra_scatter_allgather_ring(

void *buf, int count, struct ompi_datatype_t *datatype, int root,

struct ompi_communicator_t *comm, mca_coll_base_module_t *module,

uint32_t segsize)

{

int err = MPI_SUCCESS;

ptrdiff_t lb, extent;

size_t datatype_size;

MPI_Status status;

ompi_datatype_get_extent(datatype, &lb, &extent);

ompi_datatype_type_size(datatype, &datatype_size);

int comm_size = ompi_comm_size(comm);

int rank = ompi_comm_rank(comm);

int vrank = (rank - root + comm_size) % comm_size;

int recv_count = 0, send_count = 0;

int scatter_count = (count + comm_size - 1) / comm_size; /* ceil(count / comm_size) */

int curr_count = (rank == root) ? count : 0;

/* Scatter by binomial tree: receive data from parent */

int mask = 1;

while (mask < comm_size) {

if (vrank & mask) {

int parent = (rank - mask + comm_size) % comm_size;

/* Compute an upper bound on recv block size */

recv_count = count - vrank * scatter_count;

if (recv_count <= 0) {

curr_count = 0;

} else {

/* Recv data from parent */

err = MCA_PML_CALL(recv((char *)buf + (ptrdiff_t)vrank * scatter_count * extent,

recv_count, datatype, parent,

MCA_COLL_BASE_TAG_BCAST, comm, &status));

if (MPI_SUCCESS != err) { goto cleanup_and_return; }

/* Get received count */

curr_count = (int)(status._ucount / datatype_size);

}

break;

}

mask <<= 1;

}

/* Scatter by binomial tree: send data to child processes */

mask >>= 1;

while (mask > 0) {

if (vrank + mask < comm_size) {

send_count = curr_count - scatter_count * mask;

if (send_count > 0) {

int child = (rank + mask) % comm_size;

err = MCA_PML_CALL(send((char *)buf + (ptrdiff_t)scatter_count * (vrank + mask) * extent,

send_count, datatype, child,

MCA_COLL_BASE_TAG_BCAST,

MCA_PML_BASE_SEND_STANDARD, comm));

if (MPI_SUCCESS != err) { goto cleanup_and_return; }

curr_count -= send_count;

}

}

mask >>= 1;

}

/* Allgather by a ring algorithm */

int left = (rank - 1 + comm_size) % comm_size;

int right = (rank + 1) % comm_size;

int send_block = vrank;

int recv_block = (vrank - 1 + comm_size) % comm_size;

for (int i = 1; i < comm_size; i++) {

recv_count = (scatter_count < count - recv_block * scatter_count) ?

scatter_count : count - recv_block * scatter_count;

if (recv_count < 0)

recv_count = 0;

ptrdiff_t recv_offset = recv_block * scatter_count * extent;

send_count = (scatter_count < count - send_block * scatter_count) ?

scatter_count : count - send_block * scatter_count;

if (send_count < 0)

send_count = 0;

ptrdiff_t send_offset = send_block * scatter_count * extent;

err = ompi_coll_base_sendrecv((char *)buf + send_offset, send_count,

datatype, right, MCA_COLL_BASE_TAG_BCAST,

(char *)buf + recv_offset, recv_count,

datatype, left, MCA_COLL_BASE_TAG_BCAST,

comm, MPI_STATUS_IGNORE, rank);

if (MPI_SUCCESS != err) { goto cleanup_and_return; }

send_block = recv_block;

recv_block = (recv_block - 1 + comm_size) % comm_size;

}

cleanup_and_return:

return err;

}

MPI_Send

经过了一系列错误检查之后,主要是mca_pml.pml_send这个函数1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50int MPI_Send(const void *buf, int count, MPI_Datatype type, int dest,

int tag, MPI_Comm comm)

{

int rc = MPI_SUCCESS;

SPC_RECORD(OMPI_SPC_SEND, 1);

MEMCHECKER(

memchecker_datatype(type);

memchecker_call(&opal_memchecker_base_isdefined, buf, count, type);

memchecker_comm(comm);

);

if ( MPI_PARAM_CHECK ) {

OMPI_ERR_INIT_FINALIZE(FUNC_NAME);

if (ompi_comm_invalid(comm)) {

return OMPI_ERRHANDLER_NOHANDLE_INVOKE(MPI_ERR_COMM, FUNC_NAME);

} else if (count < 0) {

rc = MPI_ERR_COUNT;

} else if (tag < 0 || tag > mca_pml.pml_max_tag) {

rc = MPI_ERR_TAG;

} else if (ompi_comm_peer_invalid(comm, dest) &&

(MPI_PROC_NULL != dest)) {

rc = MPI_ERR_RANK;

} else {

OMPI_CHECK_DATATYPE_FOR_SEND(rc, type, count);

OMPI_CHECK_USER_BUFFER(rc, buf, type, count);

}

OMPI_ERRHANDLER_CHECK(rc, comm, rc, FUNC_NAME);

}

/*

* An early check, so as to return early if we are communicating with

* a failed process. This is not absolutely necessary since we will

* check for this, and other, error conditions during the completion

* call in the PML.

*/

if( OPAL_UNLIKELY(!ompi_comm_iface_p2p_check_proc(comm, dest, &rc)) ) {

OMPI_ERRHANDLER_RETURN(rc, comm, rc, FUNC_NAME);

}

if (MPI_PROC_NULL == dest) {

return MPI_SUCCESS;

}

rc = MCA_PML_CALL(send(buf, count, type, dest, tag, MCA_PML_BASE_SEND_STANDARD, comm));

OMPI_ERRHANDLER_RETURN(rc, comm, rc, FUNC_NAME);

}

pml_send函数主要有以下几个赋值:1

2

3

4

5mca_pml_cm_send

mca_pml_monitoring_send

mca_pml_ob1_send

mca_pml_ucx_send

mca_spml_ucx_send

以第一个为例,1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74__opal_attribute_always_inline__ static inline int

mca_pml_cm_send(const void *buf,

size_t count,

ompi_datatype_t* datatype,

int dst,

int tag,

mca_pml_base_send_mode_t sendmode,

ompi_communicator_t* comm)

{

int ret = OMPI_ERROR;

uint32_t flags = 0;

ompi_proc_t * ompi_proc;

if(sendmode == MCA_PML_BASE_SEND_BUFFERED) {

mca_pml_cm_hvy_send_request_t *sendreq;

MCA_PML_CM_HVY_SEND_REQUEST_ALLOC(sendreq, comm, dst, ompi_proc);

if (OPAL_UNLIKELY(NULL == sendreq)) return OMPI_ERR_OUT_OF_RESOURCE;

MCA_PML_CM_HVY_SEND_REQUEST_INIT(sendreq, ompi_proc, comm, tag, dst, datatype, sendmode, false, false, buf, count, flags);

MCA_PML_CM_HVY_SEND_REQUEST_START(sendreq, ret);

if (OPAL_UNLIKELY(OMPI_SUCCESS != ret)) {

MCA_PML_CM_HVY_SEND_REQUEST_RETURN(sendreq);

return ret;

}

ompi_request_free( (ompi_request_t**)&sendreq );

} else {

opal_convertor_t convertor;

OBJ_CONSTRUCT(&convertor, opal_convertor_t);

if (opal_datatype_is_contiguous_memory_layout(&datatype->super, count)) {

convertor.remoteArch = ompi_mpi_local_convertor->remoteArch;

convertor.flags = ompi_mpi_local_convertor->flags;

convertor.master = ompi_mpi_local_convertor->master;

convertor.local_size = count * datatype->super.size;

convertor.pBaseBuf = (unsigned char*)buf + datatype->super.true_lb;

convertor.count = count;

convertor.pDesc = &datatype->super;

/* Switches off CUDA detection if

MTL set MCA_MTL_BASE_FLAG_CUDA_INIT_DISABLE during init */

MCA_PML_CM_SWITCH_CUDA_CONVERTOR_OFF(flags, datatype, count);

convertor.flags |= flags;

/* Sets CONVERTOR_CUDA flag if CUDA buffer */

opal_convertor_prepare_for_send( &convertor, &datatype->super, count, buf );

} else

{

ompi_proc = ompi_comm_peer_lookup(comm, dst);

MCA_PML_CM_SWITCH_CUDA_CONVERTOR_OFF(flags, datatype, count);

opal_convertor_copy_and_prepare_for_send(

ompi_proc->super.proc_convertor,

&datatype->super, count, buf, flags,

&convertor);

}

ret = OMPI_MTL_CALL(send(ompi_mtl,

comm,

dst,

tag,

&convertor,

sendmode));

OBJ_DESTRUCT(&convertor);

}

return ret;

}

因为这是简单的send,所以分为两种情况,第一种是有buffer,先分配request,初始化之后等待返回。MCA_PML_CM_HVY_SEND_REQUEST_ALLOC是分配一个request,request应该是opal_free_list_wait(只包括了有多线程情况下的opal_free_list_wait_mt(fl);和无多线程情况下的opal_free_list_wait_st(fl)的调用)函数分配的,并规定了完成后的回调函数mca_pml_cm_send_request_completion。1

2

3

4

5

6

7

8

9

10

从一个栈结构里取出来一个proc1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56static inline opal_free_list_item_t *opal_free_list_wait_st(opal_free_list_t *fl)

{

opal_free_list_item_t *item = (opal_free_list_item_t *) opal_lifo_pop(&fl->super);

while (NULL == item) {

if (fl->fl_max_to_alloc <= fl->fl_num_allocated

|| OPAL_SUCCESS != opal_free_list_grow_st(fl, fl->fl_num_per_alloc, &item)) {

/* try to make progress */

opal_progress();

}

if (NULL == item) {

item = (opal_free_list_item_t *) opal_lifo_pop(&fl->super);

}

}

return item;

}

/**

* Blocking call to obtain an item from a free list.

*/

static inline opal_free_list_item_t *opal_free_list_wait_mt(opal_free_list_t *fl)

{

opal_free_list_item_t *item = (opal_free_list_item_t *) opal_lifo_pop_atomic(&fl->super);

while (NULL == item) {

if (!opal_mutex_trylock(&fl->fl_lock)) {

if (fl->fl_max_to_alloc <= fl->fl_num_allocated

|| OPAL_SUCCESS != opal_free_list_grow_st(fl, fl->fl_num_per_alloc, &item)) {

fl->fl_num_waiting++;

opal_condition_wait(&fl->fl_condition, &fl->fl_lock);

fl->fl_num_waiting--;

} else {

if (0 < fl->fl_num_waiting) {

if (1 == fl->fl_num_waiting) {

opal_condition_signal(&fl->fl_condition);

} else {

opal_condition_broadcast(&fl->fl_condition);

}

}

}

} else {

/* If I wasn't able to get the lock in the begining when I finaly grab it

* the one holding the lock in the begining already grow the list. I will

* release the lock and try to get a new element until I succeed.

*/

opal_mutex_lock(&fl->fl_lock);

}

opal_mutex_unlock(&fl->fl_lock);

if (NULL == item) {

item = (opal_free_list_item_t *) opal_lifo_pop_atomic(&fl->super);

}

}

return item;

}

回调函数mca_pml_cm_send_request_completion,主要是为了调用MCA_PML_CM_THIN_SEND_REQUEST_PML_COMPLETE的。1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67void

mca_pml_cm_send_request_completion(struct mca_mtl_request_t *mtl_request)

{

mca_pml_cm_send_request_t *base_request =

(mca_pml_cm_send_request_t*) mtl_request->ompi_req;

if( MCA_PML_CM_REQUEST_SEND_THIN == base_request->req_base.req_pml_type ) {

MCA_PML_CM_THIN_SEND_REQUEST_PML_COMPLETE(((mca_pml_cm_thin_send_request_t*) base_request));

} else {

MCA_PML_CM_HVY_SEND_REQUEST_PML_COMPLETE(((mca_pml_cm_hvy_send_request_t*) base_request));

}

}

/*

* The PML has completed a send request. Note that this request

* may have been orphaned by the user or have already completed

* at the MPI level.

* This macro will never be called directly from the upper level, as it should

* only be an internal call to the PML.

*/

/*

* The PML has completed a send request. Note that this request

* may have been orphaned by the user or have already completed

* at the MPI level.

* This macro will never be called directly from the upper level, as it should

* only be an internal call to the PML.

*/

分配完之后调用MCA_PML_CM_HVY_SEND_REQUEST_INIT进行初始化,1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

初始化完成之后开始执行send-request,并释放。1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

否则,如果不是buffer类型的send,首先创建一个convertor(后边看,可能是在不同架构下进行通信的转换器),如果没有异构的支持,需要考虑传输的数据是不是连续的,支持异构的话就不需要额外考虑内存连续性。OPAL_CUDA_SUPPORT考虑了cuda的特点。

ompi_comm_peer_lookup用于找到通信对方进程ompi_proc_t结构,原来找一个对方通信进程还需要加锁。它最终是调用了这个函数1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35/**

* @brief Helper function for retreiving the proc of a group member in a dense group

*

* This function exists to handle the translation of sentinel group members to real

* ompi_proc_t's. If a sentinel value is found and allocate is true then this function

* looks for an existing ompi_proc_t using ompi_proc_for_name which will allocate a

* ompi_proc_t if one does not exist. If allocate is false then sentinel values translate

* to NULL.

*/

static inline struct ompi_proc_t *ompi_group_dense_lookup (ompi_group_t *group, const int peer_id, const bool allocate)

{

ompi_proc_t *proc;

proc = group->grp_proc_pointers[peer_id];

if (OPAL_UNLIKELY(ompi_proc_is_sentinel (proc))) {

if (!allocate) {

return NULL;

}

/* replace sentinel value with an actual ompi_proc_t */

ompi_proc_t *real_proc =

(ompi_proc_t *) ompi_proc_for_name (ompi_proc_sentinel_to_name ((uintptr_t) proc));

// 在hash table里找proc

if (opal_atomic_compare_exchange_strong_ptr ((opal_atomic_intptr_t *)(group->grp_proc_pointers + peer_id),

(intptr_t *) &proc, (intptr_t) real_proc)) {

OBJ_RETAIN(real_proc);

}

proc = real_proc;

}

return proc;

}

然后这样就可以调用ompi_mtl->mtl_send,主要是这个函数ompi_mtl_psm2_send,到了MTL层。send还有一种实现是调用了fabric库的操作,这个先不看了。1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55int

ompi_mtl_psm2_send(struct mca_mtl_base_module_t* mtl,

struct ompi_communicator_t* comm,

int dest,

int tag,

struct opal_convertor_t *convertor,

mca_pml_base_send_mode_t mode)

{

psm2_error_t err;

mca_mtl_psm2_request_t mtl_psm2_request;

psm2_mq_tag_t mqtag;

uint32_t flags = 0;

int ret;

size_t length;

ompi_proc_t* ompi_proc = ompi_comm_peer_lookup( comm, dest );

mca_mtl_psm2_endpoint_t* psm2_endpoint = ompi_mtl_psm2_get_endpoint (mtl, ompi_proc);

assert(mtl == &ompi_mtl_psm2.super);

PSM2_MAKE_MQTAG(comm->c_index, comm->c_my_rank, tag, mqtag);

ret = ompi_mtl_datatype_pack(convertor,

&mtl_psm2_request.buf,

&length,

&mtl_psm2_request.free_after);

if (length >= 1ULL << sizeof(uint32_t) * 8) {

opal_show_help("help-mtl-psm2.txt",

"message too big", false,

length, 1ULL << sizeof(uint32_t) * 8);

return OMPI_ERROR;

}

// 前边是pack

mtl_psm2_request.length = length;

mtl_psm2_request.convertor = convertor;

mtl_psm2_request.type = OMPI_mtl_psm2_ISEND;

if (OMPI_SUCCESS != ret) return ret;

if (mode == MCA_PML_BASE_SEND_SYNCHRONOUS)

flags |= PSM2_MQ_FLAG_SENDSYNC;

err = psm2_mq_send2(ompi_mtl_psm2.mq,

psm2_endpoint->peer_addr,

flags,

&mqtag,

mtl_psm2_request.buf,

length);

if (mtl_psm2_request.free_after) {

free(mtl_psm2_request.buf);

}

return err == PSM2_OK ? OMPI_SUCCESS : OMPI_ERROR;

}

到了这里就没法继续追了,psm2_mq_send2是Performance Scaled Messaging 2里的函数,1

2psm2_error_t psm2_mq_send2 (psm2_mq_t mq, psm2_epaddr_t dest,

uint32_t flags, psm2_mq_tag_t *stag, const void *buf, uint32_t len)

发送阻塞 MQ 消息。 发送阻塞 MQ 消息的函数,该消息在本地完成,并且可以在返回时修改源数据。

Parameters:

- mq: Matched Queue handle.

- dest: Destination EP address.

- flags: Message flags, currently:

- PSM2_MQ_FLAG_SENDSYNC tells PSM2 to send the message synchronously, meaning that the message is not sent until the receiver acknowledges that it has matched the send with a receive buffer.

- stag: Message Send Tag pointer.

- buf: Source buffer pointer.

- len: Length of message starting at buf.

TCP

看代码里有tcp和rdma的实现,但是没找到怎么到tcp这块的,看到注释说是动态加载模块,可能是通过配置实现选择TCP或者RDMA的?以下缕一下TCP的执行过程。

从btl_tcp_component.c开始,这个结构保存了网络通信的信息,同时支持IPv4和IPv6,可以看到TCP通信时数据是以帧frag为单位的。1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54struct mca_btl_tcp_component_t {

mca_btl_base_component_3_0_0_t super; /**< base BTL component */

uint32_t tcp_addr_count; /**< total number of addresses */

uint32_t tcp_num_btls; /**< number of interfaces available to the TCP component */

unsigned int tcp_num_links; /**< number of logical links per physical device */

struct mca_btl_tcp_module_t **tcp_btls; /**< array of available BTL modules */

opal_list_t local_ifs; /**< opal list of local opal_if_t interfaces */

int tcp_free_list_num; /**< initial size of free lists */

int tcp_free_list_max; /**< maximum size of free lists */

int tcp_free_list_inc; /**< number of elements to alloc when growing free lists */

int tcp_endpoint_cache; /**< amount of cache on each endpoint */

opal_proc_table_t tcp_procs; /**< hash table of tcp proc structures */

opal_mutex_t tcp_lock; /**< lock for accessing module state */

opal_list_t tcp_events;

opal_event_t tcp_recv_event; /**< recv event for IPv4 listen socket */